NEWSLETTER

MSCA COFUND 2019: CO-FINANCING OF DOCTORAL AND FELLOWSHIP PROGRAMMES

The MSCA COFUND is a Horizon 2020 instrument for co-funding of ongoing or planned programmes to promote PhD students or post-docs. Therese Lindahl, the Austrian National Contact Point for Marie Skłodowska-Curie Actions, tells us more about it.

Talking about her role, Lindahl explains: “I am in charge of raising awareness about participation possibilities among potential COFUND applicants, to give advice and support in the proposal writing phase as well as during the implementation of funded programmes.”

The purpose of the COFUND scheme is to foster excellence in researchers' training, mobility and career development, thereby spreading the best practices of the Marie Skłodowska-Curie actions. “This will be achieved by co-funding new or existing programmes that provide international, intersectoral and interdisciplinary research training of researchers at all stages of their career,” adds Lindahl. COFUND can take the form of doctoral or fellowship programmes, which are expected to enhance research and innovation related human resources on a regional, national or international level.

WHO CAN APPLY?

“Legal entities in EU Member States or Horizon 2020 Associated Countries, that fund or implement doctoral or fellowship programmes for researchers are eligible to apply,” confirms Lindahl. COFUND is a mono-beneficiary action which means there must be one sole beneficiary that will also be responsible for the availability of the necessary complementary funds. “Nevertheless, collaboration with a wider set of partner organisations, including organisations from the non-academic sector, will be positively taken into account,” adds Lindahl. These partner organisations can contribute with innovative and interdisciplinary elements, for example through the hosting of secondments and providing complementary training in research or transferable skills.

Lindahl stresses that, “individual researchers cannot apply for COFUND within the calls of the European Commission but can benefit from COFUND in the way that they apply for positions offered within co-funded programmes”. Lindahl adds: “All COFUND positions must be widely advertised, for example on the EURAXESS job portal for researchers.”

TIPS ON HOW TO APPLY

Those interested in applying for the COFUND scheme should refer to the Guide for Applicants. Lindahl explains: “This is a guidance document that provides all the necessary information about the funding scheme and takes applicants step by step through the application process.” The Guide also contains information on how applicants should structure the proposal, on the evaluation procedure and on where applicants can seek further support. “It is also important to consult related parts of the MSCA Work Programme 2018-2020 in order to understand the policy objectives and overall aims of MSCA in general,” notes Lindahl, who further adds, “all relevant documents can be found on the Funding & tender opportunities portal of the European Commission. It is strongly recommended that applicants get in touch with the MSCA National Contact Point in the country concerned at an early stage of the proposal preparation.”

Additionally, applicants should bear in mind that the description of the research discipline or area makes up only a minor part of the proposal. “Applicants are asked to provide information primarily on administrative issues, for example about the selection process of the researchers to be funded, about appointment conditions and the management of the programme,” emphasises Lindahl. For this reason, people who have that kind of knowledge should be involved when drafting the proposal. Applying organisations must also be aware that, as the name indicates, the MSCA COFUND scheme funds only parts of the total budget for the implementation of the respective programme and that matching funds are needed.

MORE INFORMATION

For detailed information and advice on how to apply, visit Marie Skłodowska-Curie Actions.

THE MCAA NEWSLETTER STAFF

REVOLUTIONISING THE SUBTITLING RESEARCH LANDSCAPE

The Marie Skłodowska-Curie IF project SURE finished in 2018, but its results on subtitling sparked controversy that continues to this day. MSCA research fellow and principal investigator Agnieszka Szarkowska explains.

Subtitling facilitates language learning, linguistic diversity and multilingualism. But to be useful for viewers, subtitles have to meet certain quality criteria. SURE addressed the need for subtitling quality and defined subtitle quality indicators in terms of speed – how fast subtitles appear and disappear – and line breaks – how to best arrange text in a subtitle to be readable.

“We found that when watching content in English, most viewers managed to follow subtitle speeds higher than are now recommended on the market,” says Szarkowska, an associate professor at the University of Warsaw’s Institute of Applied Linguistics. “This has implications on how subtitling is done.”

Research involving eye tracking experiments, questionnaires and semi-structured interviews was carried out at University College London’s Centre for Translation Studies, a leading audiovisual translation institution.

REVISITING OLD TRUTHS, BRINGING SUBTITLING INTO THE 21ST CENTURY

“We know that many people are now able to read faster, compared to the 1980s when most studies on subtitle reading were conducted,” Szarkowska notes. SURE showed the need to update subtitling standards and perform up-to-date research, stressing the importance of replication.

The project will impact end users in various ways. For researchers, it demonstrates the need to do studies on important aspects of audiovisual translation, even if other studies were done in the past. “The field is changing,” comments Szarkowska. For viewers, language service providers and broadcasters, outcomes may translate into better quality subtitles. “For instance, we know what types of line breaks facilitate smoother processing, and it’s up to broadcasters to implement these results so that viewers can benefit from better quality subtitles.”

Results were met with much controversy from some professional subtitlers and researchers. SURE prompted discussions on subtitling speed, and its findings continue to be hotly debated.

The essence of interlingual subtitling (translated, as in English to Spanish, not English to English) is text condensation. “You can’t fit in subtitles everything that’s being said for two reasons,” explains Szarkowska. “One is time constraints, people need to be able to read the subtitles, and subtitles need to be synchronised with dialogue. The other is space constraints, there are only 2 lines of text, about 40 characters each. With fast speech rates, condensation is inevitable.”

THE NEED FOR SPEED

“But how much do you need to reduce and condense?” she asks. This depends on many factors, one being the so-called reading speed. This is the pace with which subtitles are displayed on screen. It’s usually measured with characters per second (cps). Traditionally, in many subtitling countries like those in Scandinavia, the reading speed has been low: 10-12 cps. The lower the speed, the more the need to condense.
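The relationship between display time, speed limit and condensation can be sketched in a few lines. This is only an illustration: the function names are hypothetical, and counting every character (including spaces and punctuation) is an assumption, since counting conventions differ between subtitling guidelines.

```python
def chars_per_second(text: str, duration_s: float) -> float:
    """Reading speed of one subtitle: characters shown divided by display time.

    Assumption: every character counts, including spaces and punctuation.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return len(text) / duration_s


def max_chars(speed_cps: float, duration_s: float) -> int:
    """Largest character count that stays within a given speed limit."""
    return int(speed_cps * duration_s)


subtitle = "I never said that to him."  # 25 characters
print(chars_per_second(subtitle, 2.0))  # speed when displayed for 2 seconds

# The same 3-second slot holds less text at a lower speed limit,
# which is why lower speeds force more condensation.
print(max_chars(12, 3.0), max_chars(16, 3.0))
```

For a three-second subtitle, moving the limit from 12 to 16 cps leaves room for roughly a third more text.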

With the arrival of Netflix, TED and other new players, reading speeds have gone up. “It begs the question: What is the optimum speed?” Szarkowska adds. “This, of course, is impossible to answer, it’s like the holy grail of subtitling. It depends on factors like who the audience is, the genre and topic difficulty.” Overall, many viewers in the study said they thought interlingual subtitles were often “inaccurate” because they didn’t fully reflect what people said. This stems from excessive text condensation.

According to Dr Szarkowska, a lot of viewers, particularly the young, can understand the original English dialogue and compare the text in the subtitles with the original. They can see that a lot has been removed, and many even think too much. The eye tracking study showed that, contrary to what is often claimed, many people could comfortably follow speeds of 16 and 20 cps. This goes against most current market practices.

Condensation is necessary in subtitling, but the speeds of 10-12 cps were set in the early 1980s. People read faster today, and Szarkowska’s research gives some evidence of this. “To conclude, I believe the speeds could be raised to 16 cps without harming viewers. On the contrary, many viewers may appreciate it if subtitles were more accurate. However, professionals may take this to mean that subtitles would be translated word for word, which isn’t what they have been doing since they believe subtitling is an art. They have a point of course, and there’s a danger in that, too.”

THE MCAA NEWSLETTER STAFF

A BATTLE FOR SURVIVAL BETWEEN BACTERIAL PATHOGENS AND THEIR VIRUSES IN THE HUMAN BODY

Bacterial viruses (also known as “phages”) are the viruses that infect bacterial strains. Such viruses are amongst the most abundant entities on planet Earth. As phages do not attack human cells, they are being reconsidered as a natural cure against antibiotic-resistant bacterial pathogens.

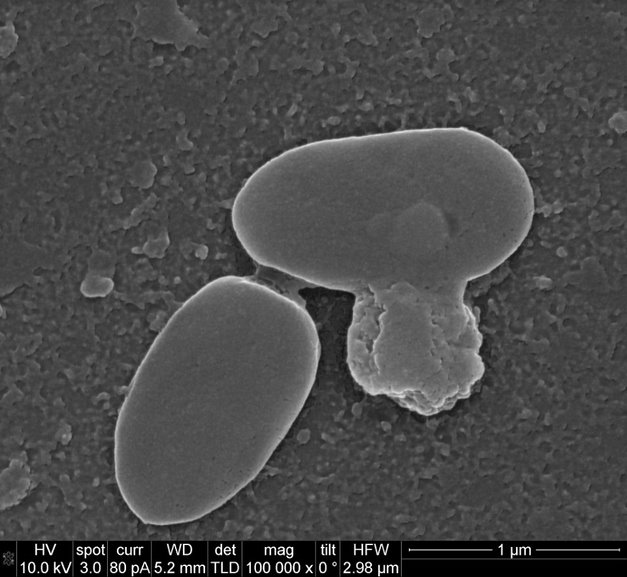

As the life cycle of phages involves a bacterial cell for their production, they influence the microbial world significantly. Because of their unique property of infecting and ultimately killing bacterial species (a process called “lysis”), they are being researched for their lytic potential, or their ability to induce lysis, against many deadly bacterial pathogens (Figure 1). Since their discovery – before antibiotics – bacteriophages have played a significant role in shaping the field of molecular biology and are now being examined anew for their role in what is known as bacteriophage therapy, the application of phages to treat different bacterial infections.

THE NEED FOR BACTERIOPHAGE THERAPY

Considering the global concern over the growing resistance of bacteria to antibiotics, the use of bacteriophages as therapy has received great attention, especially as it appears to offer an excellent way to combat multidrug-resistant (MDR), extensively drug-resistant (XDR) and pan-drug-resistant (PDR) pathogens. Bacterial pathogens may develop resistance to bacteriophages over time through the CRISPR-Cas (Clustered Regularly Interspaced Short Palindromic Repeats) system, a genetic modification system that provides immunity against foreign genetic material. A possible way of countering this could be the use of bacteriophages’ lytic enzymes; moreover, there is no evidence that bacterial strains have developed resistance to such enzymes. Hence, it seems that synergistic approaches for targeting such drug-resistant bacterial pathogens can provide the best solution.

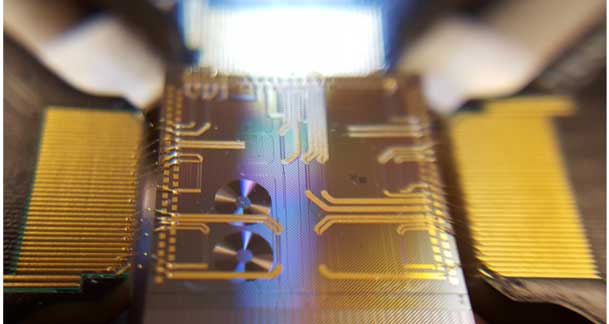

Figure 1: Representative image of natural bacterial lysis by bacteriophage infection.

There are definitely many advantages of using phages over antibiotics, but a phage-antibiotic synergy seems to be an essential strategy in case of chronic infectious diseases associated with cystic fibrosis. For developing effective phage-using therapy, it is of critical importance to screen for and select phages from different clinical and environmental sources in order to avoid “site-specific evolution” among the phage-microbe community. In addition, such selective phage lytic enzymes can provide an additional advantage to support effective treatment strategies in the near future.

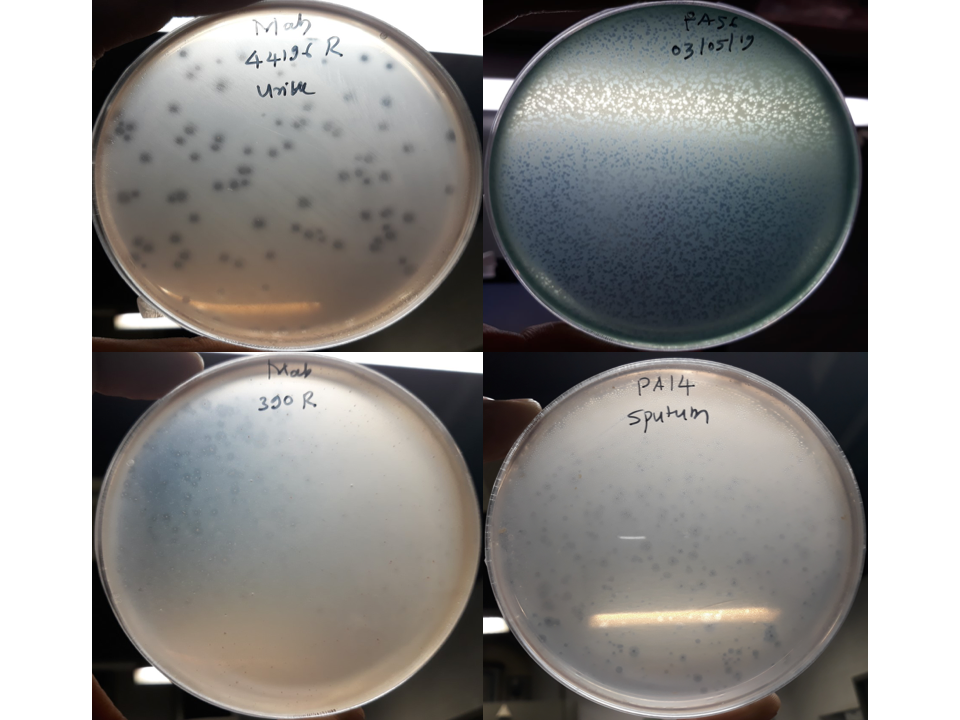

Figure 2: Representative images for isolated bacteriophages against Mycobacterium abscessus rough type strains (Mab 44196R from urine and Mab 390R from sewage) and Pseudomonas aeruginosa strains (PA 14 from sputum and PA56 from sewage).

Mutating bacteriophages to target resistant pathogens in humans was recently reported (2), clearly indicating their safe applicability. But it is also necessary to consider the environmental impact of such released phages, as this particular application may drive the co-evolution of the phage-microbe community even further, in unprecedented ways – or as one might describe it, “mutating the mutators”. Likewise, natural phages can evolve and be explored for targeting antibiotic-resistant bacteria. Overall, isolating new lytic phages (Figure 2) is a lot more efficient than modifying one (1).

PROJECT BACKGROUND

In our ongoing project, we are isolating different bacteriophage strains from clinical and environmental samples and testing them, in vitro and in vivo, against pathogens isolated from patient samples, targeting health-threatening biofilm-forming microbes that are commonly involved in many infections. Bacteriophages seem to be more robust towards previously unencountered bacterial hosts than towards those with which they reside. During our in vitro bacteriophage therapy studies with Pseudomonas aeruginosa (strains PAO1, P14, P56, PAET1, and PAET2), Mycobacterium abscessus (strains 390S, 390R, BE96S, BE96R, BE48S, BE48R, 44196S, 44196R, BE03S, BE63S, and BE82S), and Escherichia coli (strains E2348/69, MG1653, LF82, and CFT073), we observed that bacteriophages isolated from different clinical and environmental samples had different outcomes (Figure 2). We tried to isolate phages from clinical samples (sputum, urine, and saliva) of infected patients in order to target biofilm-producing bacteria that cause chronic pulmonary infection associated with cystic fibrosis or chronic obstructive pulmonary disease (COPD).

Surprisingly, we found that phages isolated from clinical sources were less infective towards their clinical bacterial counterparts, resulting in little or no plaque formation in the plaque assay, the method used to isolate viruses. This technique shows the number of infectious particles, as each virus produces a circle of infected cells called a plaque. In contrast, the phages isolated from sewage sources were highly virulent towards these hosts, indicating that bacteriophages available at the source of infections probably provide phage immunity through co-evolution (3).

Moreover, the sewage phages, which do not share such an immunological history, end up infecting the pathogens. These findings suggest that phage-host adaptation and evolution are “site-specific”, and that to find highly infective phages against pathogens we must screen different clinical and/or environmental samples. In our case, enriching the phages isolated from the infection site and reintroducing them also provided a suitable approach for effective phage therapy.

CONCLUSION

We would like to conclude that bacteriophages can be harnessed for their natural antibacterial potential through a single lytic phage or a cocktail of different lytic phages, phage lytic enzymes (1), antibiotics and/or synergistic combinations of them all to overcome antibiotic resistance (4). Also, the timely development of such approaches will help to normalise therapeutic treatments for different chronic infections and to successfully develop state-of-the-art therapies for antibiotic resistance in the near future.

ACKNOWLEDGEMENT

This work is funded by the European Commission under the Horizon 2020 Marie Skłodowska-Curie Actions COFUND scheme (Grant Agreement No. 712754) and by the Severo Ochoa programme of the Spanish Ministry of Science and Competitiveness [Grant SEV2014-0425 (2019–2021)].

Swapnil Ganesh Sanmukh and Eduard Torrents Serra

Bacterial Infections: Antimicrobial Therapies, Institute for Bioengineering of Catalonia (IBEC), The Institute of Science and Technology, Baldiri Reixac 15-21, 08028, Barcelona, Spain

Correspondence: ssanmukh@ibecbarcelona and etorrents@ibecbarcelona.eu

1. K. Abdelkader, H. Gerstmans, A. Saafan, T. Dishisha, Y. Briers, The preclinical and clinical progress of bacteriophages and their lytic enzymes: The parts are easier than the whole. Viruses 11, 96 (2019).

2. R. M. Dedrick, C. A. Guerrero-Bustamante, R. A. Garlena, D. A. Russell, K. Ford, K. Harris, K. C. Gilmour, J. Soothill, D. Jacobs-Sera, R. T. Schooley, et al., Engineered bacteriophages for treatment of a patient with a disseminated drug-resistant Mycobacterium abscessus. Nat. Med. 25, 730-733 (2019).

3. B. Koskella, M. A. Brockhurst, Bacteria-phage coevolution as a driver of ecological and evolutionary processes in microbial communities. FEMS Microbiol. Rev. 38, 5, 916-931 (2014).

4. A. M. Segall, D. R. Roach, S. A. Strathdee, Stronger together? Perspectives on phage-antibiotic synergy in clinical applications of phage therapy. Curr. Opin. Microbiol. 18, 51, 46-50 (2019).

NEW USES FOR OLD DRUGS: THE MAGIC OF NANOPARTICLE WRAPPING

Most people in the world have had a friend or a family member who has been diagnosed with or suffered from cancer. This should not come as a surprise: 1.93 million people died from cancer in Europe alone in 2018.

The disease types differ between adults and young people. In adults, the most common types of cancer are lung, colorectal, breast and pancreatic cancer. In children and teenagers, on the other hand, lymphoma, brain cancer and osteosarcoma are most frequent.

Of young people’s cancers, osteosarcoma is a bone tumour disease that affects mainly children and adolescents, with a second peak of incidence showing up in adults over the age of 50. In the former group, it usually occurs in the long bones such as femur and tibia, while in the latter it localises mainly in the skull, jaw and pelvis. Current treatment options for osteosarcoma include surgery and chemotherapy. In surgery, which is preferably used, the tumour is completely removed, if possible. In contrast, chemotherapy is used to reduce the tumour size and eliminate metastatic cells which may spread from bones to other parts of the body, such as lungs. Thanks to the implementation of chemotherapy in the 1970s, the survival rate of osteosarcoma has improved substantially, reaching 65 % in the first 5 years after cancer therapy. However, when it becomes metastatic, spreading from bone to distal tissues of the body, survival rate drops to 20 %. This number has remained unchanged in the last 30 years. That’s why novel approaches are needed to develop new therapies.

Unfortunately, the development of novel drugs from scratch takes a lot of time and money, and osteosarcoma is not so prevalent a disease as to move big pharma companies to assume such a costly effort. There is, however, an alternative that looks more appealing: drug repositioning. This is based on recycling an already available chemotherapeutic agent, from one disease to another. It is a relatively rapid and cost-efficient approach, as much of the preliminary work – such as biocompatibility studies, manufacturing and authorities’ approval – is already done. My PhD project is thus focused on repositioning drugs from other cancer types toward the treatment of paediatric osteosarcoma. But as such an approach is not as simple as screening drugs of other cancer treatments for osteosarcoma, we have introduced another factor: nanotechnology.

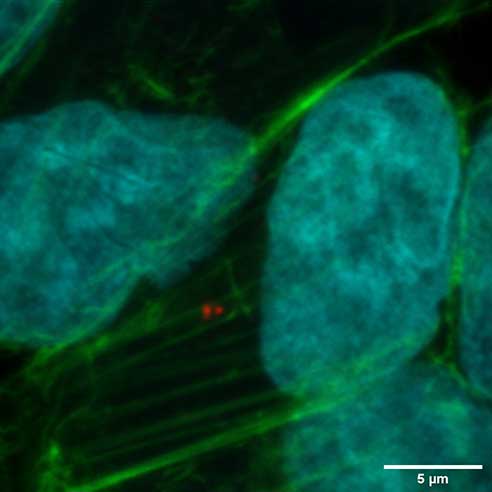

As is well known, the main drawback of chemotherapy is the production of undesirable, serious side effects. Nanotechnology can help ameliorate these repercussions, and we intend to use it as the main focus of our approach. Nanoparticles are tiny particles of different materials, between 1 and 100 nm in diameter (1 nm = 10⁻⁹ m). They have been used unwittingly in human crafts for thousands of years. For example, stained glass in the windows of mediaeval cathedrals contains gold or silver nanoparticles that infuse the windows with different colours, depending on particle characteristics such as size and shape.

Recent advancements in nanotechnology have broadened our understanding of nanomaterials and how we can use them to improve the quality of people’s lives. Their use has attracted much attention in cancer treatment, as many nanoparticles are biocompatible and, by acting as vehicles and vectors in drug delivery, may also have the ability to reduce the toxicity and side effects of chemotherapeutic agents. Nanoparticles can be tuned up in order to perform different functions. Their surface can be chemically modified, for example, to direct them specifically toward the tumour site, or at least toward the tissue with the tumour, reducing undesirable side effects in other tissues as well. Surface characteristics can also protect them from the immune system, and help them survive kidney cleansing, thus extending their time in the blood stream.

A particular quality of nanoparticles makes them especially attractive for the delivery of cytotoxic drugs: they can respond to selected stimuli. Nanoparticles can be designed in such a way that they will only release drugs in response to changes in pH, changes in temperature, presence of blue light, or the presence of a magnetic field. This offers the unique possibility, for the first time in cancer treatment, of directing treatment to destroy the tumour cells and leave others intact. If we focus on one selected stimulus at the tumour site, nanoparticles circulating in the blood stream will guard their toxic cargo until they reach the exact site of the stimulus – that is, the tumour – leaving other tissues safely alone.

In conclusion, nanotechnology can help us reduce dosages and increase the targeted action of cancer drugs, making chemotherapy more efficient and less life-disturbing for the patient. For these reasons, the combination of nanotechnology with drug repositioning is a promising candidate for the treatment of osteosarcoma, and we believe it will be incorporated in the preoperative treatment regimen for this type of cancer.

LILIYA KAZANTSEVA

ON-CHIP COLOURED LIGHT SOURCES UNLEASH THE POWER OF QUANTUM TECHNOLOGIES

Photon sources are one of the most important enablers for the development of quantum technologies, with researchers seeking to control and harness photon properties. The DC FlexMIL project developed flexible on-chip light sources, a significant step forward in this direction.

The DC FlexMIL project, a Marie Skłodowska-Curie Individual Fellowship, focused on the development and control of novel integrated light sources for applications in both classical and quantum technologies. It merged fundamental scientific investigations with recent advances in integrated photonics to reach its goals. The three-year project concluded in December 2018. As a recipient of this individual fellowship, Michael Kues benefitted from the opportunity to spend time at the INRS-EMT in Canada and the University of Glasgow in the UK. The opportunity to share knowledge and ideas with international colleagues was central to the achievements of the project. Currently, he is an appointed professor at the Leibniz University of Hannover, continuing his research on compact on-chip optical quantum systems.

MICRORING RESONATOR BASED LASER

The first notable achievement of DC FlexMIL was the development of a mode-locked laser with a microring resonator as its cavity, generating very narrow-bandwidth laser pulses.

“Most mode-locking techniques introduced in the past mainly aimed at creating increasingly shorter pulses with broader spectra. Little progress has been achieved so far in producing mode-locked lasers generating stable narrow-bandwidth nanosecond pulses,” notes Kues.

Capitalising on recent advances in nonlinear micro-cavity optics, the project team successfully produced the first pulsed passively Kerr mode-locked nanosecond laser, with a record-low and transform-limited spectral width of 105 MHz – more than 100 times lower than any mode-locked laser to date. With a compact architecture, modest power requirements, and the unique ability to resolve the full laser spectrum in the radio-frequency domain, the laser paves the way towards full on-chip integration for novel sensing and spectroscopy implementations.
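To see why a transform-limited width of 105 MHz corresponds to nanosecond pulses, the time-bandwidth relation can be evaluated directly. This is a rough sketch, not the project's own calculation: it assumes a Gaussian pulse shape, for which the product of the full-width-at-half-maximum duration and bandwidth is about 0.441. Other pulse shapes use a different constant (about 0.315 for sech² pulses), and the function name is hypothetical.

```python
# Time-bandwidth product of a transform-limited Gaussian pulse (FWHM values).
# Assumption: the pulse shape is Gaussian; the article does not state it.
GAUSSIAN_TBP = 0.441


def transform_limited_duration(bandwidth_hz: float, tbp: float = GAUSSIAN_TBP) -> float:
    """Shortest pulse duration (seconds) compatible with a given spectral width."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return tbp / bandwidth_hz


duration_s = transform_limited_duration(105e6)  # 105 MHz spectral width
print(f"{duration_s * 1e9:.1f} ns")
```

Under the Gaussian assumption the result is a few nanoseconds, consistent with the nanosecond regime described in the article.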

ON-CHIP LIGHT SOURCES EMITTING SINGLE PHOTONS

Along with using the microring resonator for classical laser concepts, the project team also used it in the quantum domain to realise novel light sources at the single photon level, opening up a new avenue of investigation.

“We used the microring resonator to generate – through the non-linear frequency conversion process of four-wave mixing – on-chip quantum frequency combs. These light sources comprised of many equally-spaced frequency modes enabled us to generate multiple entangled qubit states of light,” explains Kues.

This is the first time anyone has demonstrated the simultaneous on-chip generation of multi-photon entangled qubit states. Until now, integrated systems developed by other research teams had only succeeded in generating individual two-photon entangled states on a chip.

“Multiple qubits can be linked in entangled states, where the manipulation of a single qubit changes the entire system, even if individual qubits are physically distant. This property is the basis for quantum information processing, aiming towards building superfast quantum computers and transferring information in a completely secure way,” adds Kues.

ENTANGLED PHOTON STATES OF HIGHER DIMENSIONS

Another important aspect of the project research was the dimensions of the entangled system. A qubit is a two-dimensional system, for example a 0 and a 1. Instead of being limited to just two levels, qudits (with a ‘d’) can exist in more levels, 0, 1, 2, and 3 for example. For every added level, the processing ability of a qudit increases. But how is this relevant to quantum computing?

To reach the processing capabilities required for meaningful quantum applications, it is necessary to scale up the information content we can process. “Recent research claims that 50 qubits are needed for realising practical quantum computations. However, a large number of qubits does not necessarily translate to a leap in computational capability. There are issues with how well connected those qubits are,” outlines Kues. Therefore, instead of increasing the number of qubits, another research avenue consists in maintaining a smaller number of qudits, each able to hold a greater range of values.

By exploiting the frequency degree of freedom of a photon, the researchers succeeded in generating entangled qudit states in an integrated format, where the photon is in a superposition of many colours. With each frequency (colour) representing a dimension, the team reported the realisation of a quantum system with 100 dimensions using two entangled qudits each with 10 levels.
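The figure of 100 dimensions follows from simple counting: a system of n carriers with d levels each spans d to the power n dimensions. The sketch below only illustrates that arithmetic; the function names are hypothetical and nothing here models the experiment itself.

```python
import math


def state_space_dimension(levels: int, carriers: int) -> int:
    """Dimension of the joint state space: levels ** carriers."""
    return levels ** carriers


def equivalent_qubits(levels: int, carriers: int) -> float:
    """Number of qubits whose state space would have the same dimension."""
    return carriers * math.log2(levels)


# Two entangled qudits with 10 levels each, as reported in the article.
print(state_space_dimension(10, 2))
# The same dimension expressed as a (non-integer) number of qubits.
print(round(equivalent_qubits(10, 2), 2))
# For comparison: two entangled qubits span only 4 dimensions.
print(state_space_dimension(2, 2))
```

Two ten-level qudits therefore cover a state space that would otherwise need between six and seven qubits.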

An attractive feature of this achievement is that it was done using inexpensive, commercially available components. This means that the technology can be easily adapted by other researchers, potentially heralding a period of very rapid development in the field.